Grow as an AI Engineer, past the surface level.

Three practical, in-depth articles to help you grow as a well-rounded AI Engineer!

Hey all,

This is a short edition covering three key concepts that I believe AI Engineers would benefit from understanding beyond the surface level.

Building projects with AI is the easy part. Many people focus on building with AI, using everything pre-packaged and ready to plug-and-play.

The AI Engineers who not only build, but also understand the more advanced components underneath, will win big.

How LLM Inference Works

As with any AI topic, it might sound too technical or too complicated at first, but that’s not the case. Once you understand the basic idea behind how models work, it’s actually quite straightforward.

A good framework is to think of Large Language Models (LLMs) as systems that are really good at predicting the next word in a sequence.

Here's a simple way to understand how they do it:

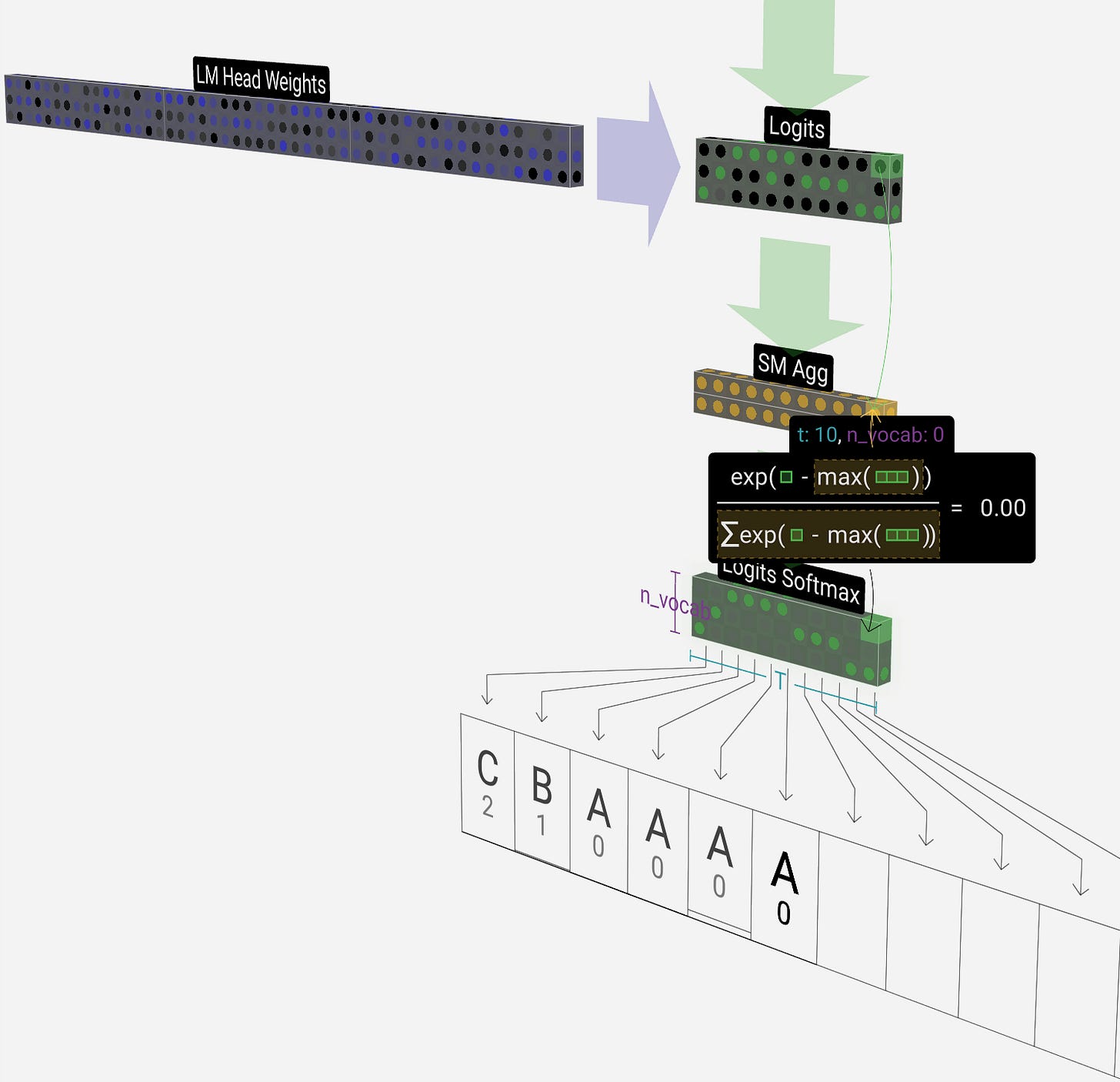

Imagine you give an LLM an input sentence, a prompt. This sentence is split into tokens, where a token is a word or a piece of a word taken from the model's vocabulary. As these tokens pass through the chain of Transformer layers inside the LLM, an internal state, also called an encoding, is computed for each token.

Towards the far end of the neural network, these `encodings` are aggregated and passed through a prediction block, which generates a probability score for each token in the entire vocabulary the LLM was trained on.

Getting a bit more technical, these raw token “encodings” go through this process:

They are "flattened out" so they can all be considered together.

Then, a Softmax activation function takes over and turns them into probabilities.

Each of these probabilities tells the LLM how likely it is that a specific token from its vocabulary will come next in your sentence. In the simplest case (greedy decoding), the token with the highest probability is chosen as the LLM's prediction!
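To make the Softmax step concrete, here is a minimal sketch in plain Python. The four-word vocabulary and the raw scores are made up for illustration; a real LLM produces one score per token for a vocabulary of tens of thousands of tokens.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical vocabulary and made-up scores for the prompt "The cat sat on the"
vocab = ["mat", "dog", "moon", "sofa"]
scores = [3.2, 0.1, -1.0, 2.5]

probs = softmax(scores)

# Greedy choice: take the token with the highest probability
next_token = vocab[probs.index(max(probs))]
print(next_token)
```

Picking the single most likely token is only one option. Many systems sample from these probabilities instead, which is why the same prompt can produce different answers.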

So, in essence, LLMs are constantly calculating the chances of different words appearing next, based on what they've learned from vast amounts of text.
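The idea of "learning the chances of the next word from text" can be sketched with a toy model that simply counts which word follows which. This is a deliberately simplified stand-in, not a Transformer: the tiny corpus and the helper names (`train_bigrams`, `next_word_probs`, `generate`) are assumptions made for this example.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus standing in for "vast amounts of text"
corpus = "the cat sat on the mat . the cat ate the fish ."

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probs(counts, word):
    """Convert follow-up counts into probabilities."""
    total = sum(counts[word].values())
    return {w: c / total for w, c in counts[word].items()}

def generate(counts, start, max_words=5):
    """Repeatedly append the most likely next word (greedy decoding)."""
    out = [start]
    for _ in range(max_words):
        followers = counts.get(out[-1])
        if not followers:
            break
        out.append(followers.most_common(1)[0][0])
    return out

counts = train_bigrams(corpus)
print(next_word_probs(counts, "the"))
print(generate(counts, "on", max_words=2))
```

An LLM follows the same predict, append, repeat loop, except that its probabilities come from the Transformer layers looking at the whole prompt rather than from a lookup on the previous word.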

Once you understand this simple process, it opens up analogies that help you grasp more complicated workflows such as MoE (i.e. LLM Mixture of Experts) or Multimodal LLMs (e.g. Vision Language Models).

📔 Please see the following article to understand the full process:

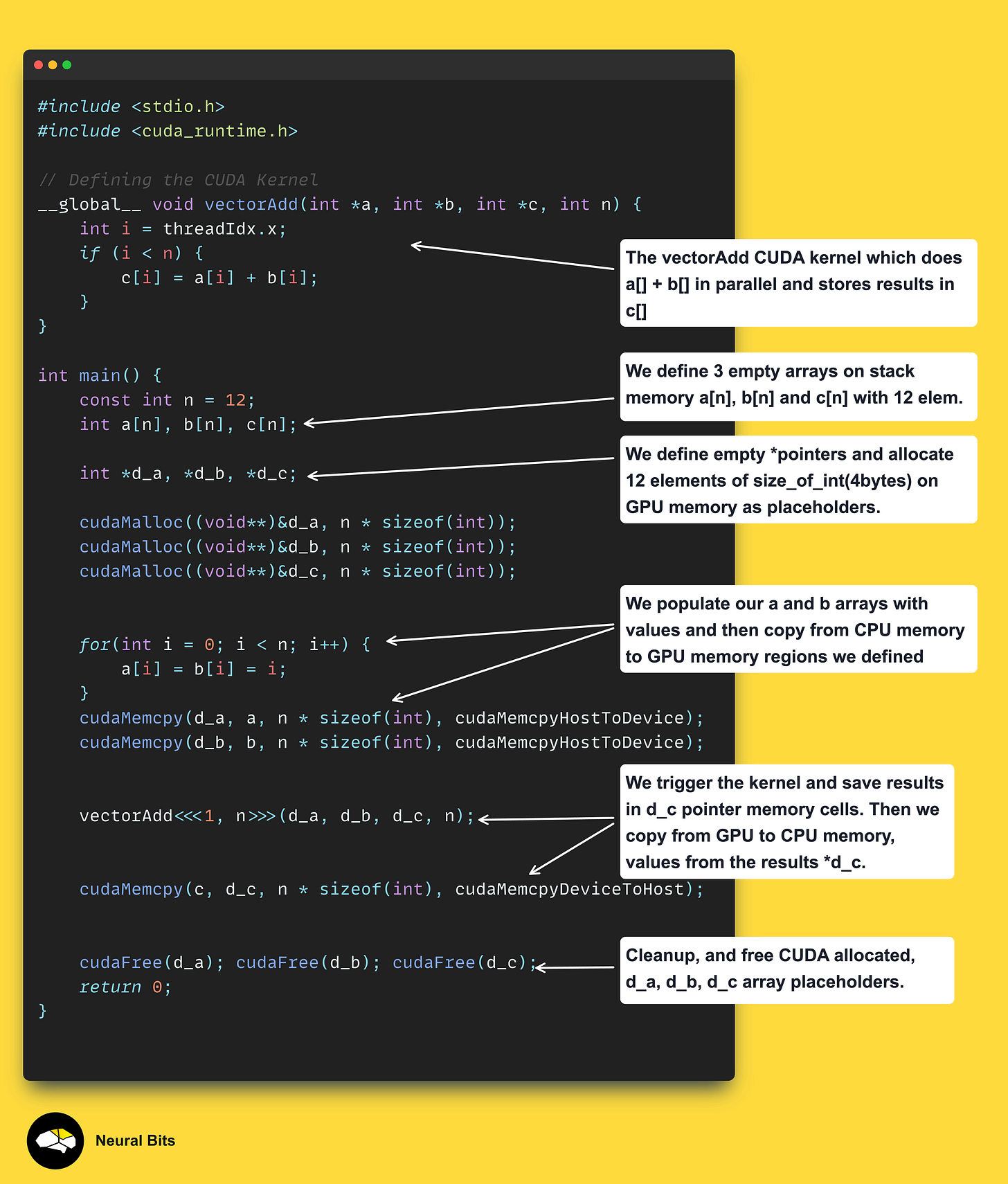

The role of GPUs in AI

You might be surprised, but a lot of resources don't really explain why GPUs are so crucial for AI. That's why I wanted to write about it!

Often, discussions about GPUs and AI get into advanced technical concepts and are usually aimed at AI experts or specialized engineers such as Deep Learning or Compiler Engineers. But I think every AI/ML Engineer should at least understand how AI uses GPUs. We've all heard of NVIDIA CUDA, but most of us just use it by calling `.cuda()` or `.to("cuda")` in our PyTorch code without really digging into what's happening behind the scenes.

Now, you don't need to become a hardware expert. You don't need to memorize the specifics of CUDA cores, Tensor Cores, HBM (High Bandwidth Memory), or SMs (Streaming Multiprocessors). Knowing every tiny detail about the hardware probably won't help you much in your day-to-day AI work.

However, I strongly believe that every AI Engineer benefits from a high-level understanding of:

How AI training and prediction (inference) use GPUs.

What kernels are (these are the fundamental programs that run on GPUs).

And how these kernels are programmed (again, at a high level).
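To give a feel for what a kernel is, here is a rough sketch that emulates the CUDA execution model in plain Python. It is not real GPU code: the `launch` helper is hypothetical and runs the "threads" one after another on the CPU, whereas a GPU would run them in parallel. The indexing pattern (block index times block size plus thread index) mirrors how CUDA kernels typically locate their element.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Work done by ONE thread: compute a single output element."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(out):  # guard: the last block may have spare threads
        out[i] = a[i] + b[i]

def launch(kernel, grid_dim, block_dim, *args):
    """Emulated launch (hypothetical helper): loops where a GPU would parallelize."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(a)

block_dim = 4                                     # threads per block
grid_dim = (len(a) + block_dim - 1) // block_dim  # enough blocks to cover every element

launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)
```

The key point is that the kernel describes the work of a single thread, and the launch configuration decides how many threads run it. Matrix multiplications in training and inference follow the same pattern at a much larger scale.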

On that note, I’ve written the following article, which I strongly believe will answer many questions you might have, or have had, about GPU Programming and its importance in AI.

📔 In this article you’ll learn the fundamentals of GPUs, CUDA, Kernels and more:

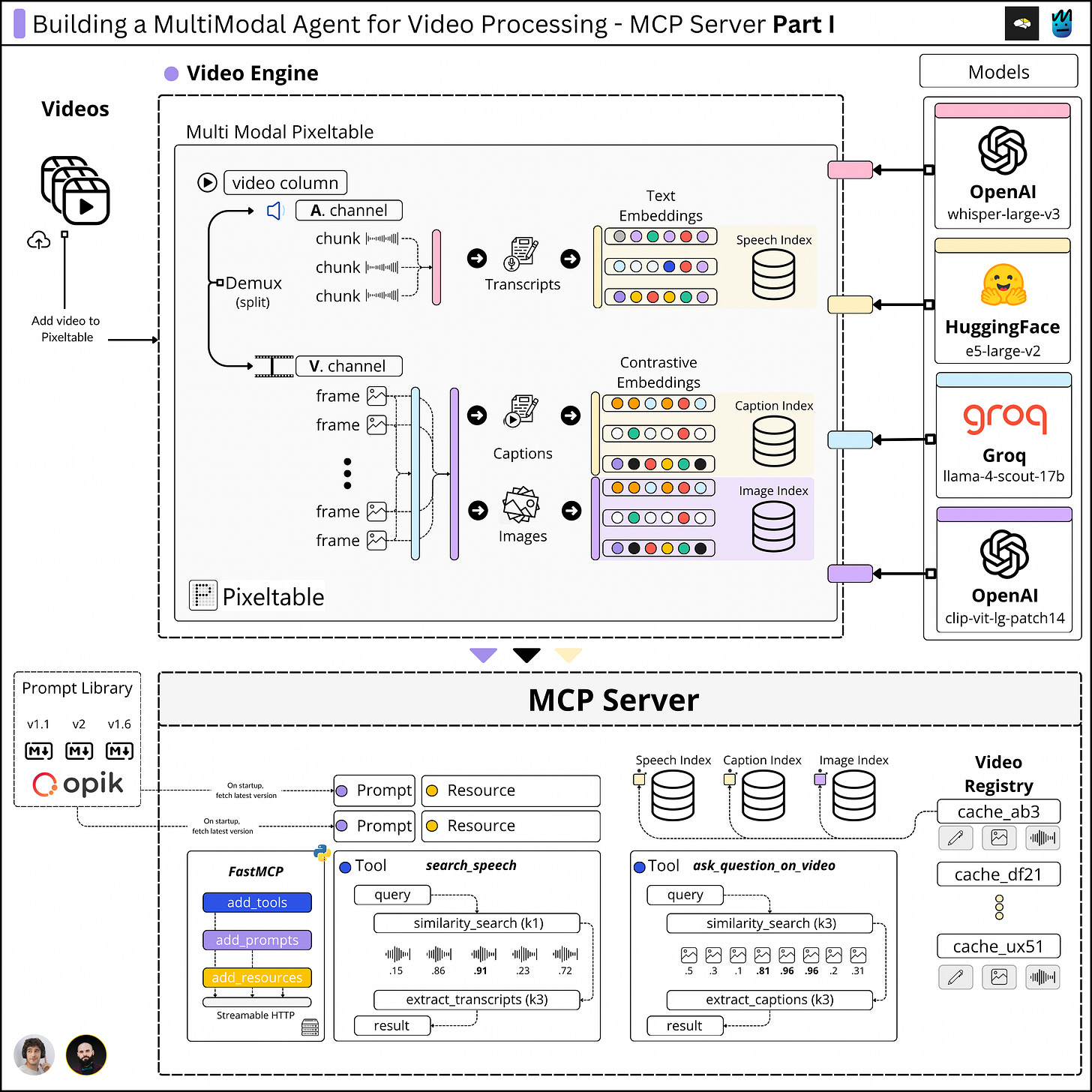

Understanding Model Context Protocol

At first, LLMs were mainly perceived as chatbot applications. In the past few years we’ve seen a boom in LLM variants, with different parameter sizes, architectures and context lengths.

Further, with instruction-following, function-calling and the new agentic workflows, the industry started to build tools and frameworks to standardize these fast-evolving concepts.

The Model Context Protocol (MCP) is a direct result of that. MCP is an open standard and framework developed by Anthropic to standardize how LLMs interact with external tools, systems, and data sources.

However, when writing about MCP I didn’t want to focus on the basics that can be found anywhere. I went one level further and unpacked the Protocol, Transport, RPC Messages and other core components to learn and understand every moving piece.
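As a small taste of the RPC layer, here is a sketch of what MCP messages can look like on the wire. MCP is built on JSON-RPC 2.0; the `tools/list` and `tools/call` method names and the newline-delimited framing for the stdio transport reflect my reading of the spec, so double-check them against the official documentation. The `get_weather` tool, its arguments and the helper functions are hypothetical.

```python
import json

def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message

def frame_for_stdio(message):
    """Serialize as a single line, assuming a newline-delimited stdio transport."""
    return json.dumps(message, separators=(",", ":")) + "\n"

# Ask the server which tools it exposes
list_tools = make_request(1, "tools/list")

# Call a hypothetical tool with hypothetical arguments
call_tool = make_request(
    2,
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Lisbon"}},
)

wire = frame_for_stdio(call_tool)
decoded = json.loads(wire)  # what the receiving side would parse
print(wire, end="")
```

The `id` field is what lets the client match a response to the request that triggered it, which matters once several calls are in flight at the same time.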

📔 Please see the following article to learn about the more advanced components of MCP:

New Collab - Free AI Course

I’ve hinted at this before 🤩 and have now joined forces with Miguel Otero Pedrido to bring you an open-source course that covers:

Multimodal Data - audio, video, images.

Agents - tool calling, routing, memory.

MCP - we’ll build a complex one.

Vision Language Models (VLMs), Speech to Text (STT) and more.

Monitoring, LLMOps and more.

📌 Here’s a snippet of what we’re going to build!

Stay tuned for the first lesson, it's coming soon!

See you next week!

All images are created by the author, unless stated otherwise.