MLOps at Big Tech and why 51% of AI projects fail to reach production.

The state of AI Adoption in 2024. The 4 and 5 level MLOps maturity models. Two practical methods to integrate MLOps principles in your team/company.

Abstract

Although ChatGPT was released in November 2022, 2023 was the year the world discovered Generative AI. Organizations already foresee material benefits from gen AI use, reporting both cost decreases and revenue jumps.

This also spiked the AI adoption rate worldwide, as per an analysis done by McKinsey & Company [1]:

Beyond the adoption rate, the financials show the same trend:

Another study by VentionTeams™ [2], covering the details and challenges of AI adoption across various industries, reports some interesting metrics:

1. Over 80% of businesses have embraced AI to some extent, viewing it as a core technology within their organizations.

2. Alongside the surge in AI adoption, financial commitment to AI has also grown. In 2018, only 40% of organizations actively using AI devoted over 5% of their digital budgets to it; by 2023, that number had jumped to 52%.

Going further, if we inspect the top 5 driving factors of AI adoption as presented by the IBM Global AI Adoption Index [3] we notice:

43% - Advancements in AI that make it more accessible

42% - Need to reduce costs and automate key processes

37% - The increasing amount of AI integrated into standard off-the-shelf business applications

31% - Competitive pressure

31% - Demands due to the COVID-19 pandemic.

Moreover, as per statistics on AI Adoption Challenges, we note that:

76% of leaders struggle to implement AI

54% of AI pilot projects reach production

56% of firms mention data quality as a major challenge

18% of businesses lack a strategy

Taking all of these into consideration, you might ask one of the following questions:

If so many businesses see the potential of AI, what’s stopping them from adopting it?

Why do only half of AI projects reach production?

Why do so many companies mention data quality as a challenge?

And so on

If the potential is there, the proof of value is there, and both peer pressure and the trend keep growing, what’s the challenge from a development standpoint?

The key detail in posing this question is that many companies see the potential, but don’t necessarily acknowledge the complex processes underneath when managing an efficient AI system, from business analysis up to deploying the AI-powered solution.

In this article, we’ll focus on that problem and cover the concept of MLOps processes, as recommended by Big Tech (e.g. Google, Amazon, IBM).

Table of Contents:

What is MLOps in a few words

Not using MLOps

Why MLOps is different than DevOps

MLOps processes

AIOps, DLOps, Model & Data Ops

MLOps at Big Tech and Maturity Models

How to achieve the right level of MLOps

How to integrate MLOps

Conclusion

What is MLOps in a few words

MLOps [4], as presented in the paper, stands for Machine Learning Operations and is a core function of Machine Learning engineering, focused on streamlining the process of taking machine learning models to production and then maintaining and monitoring them.

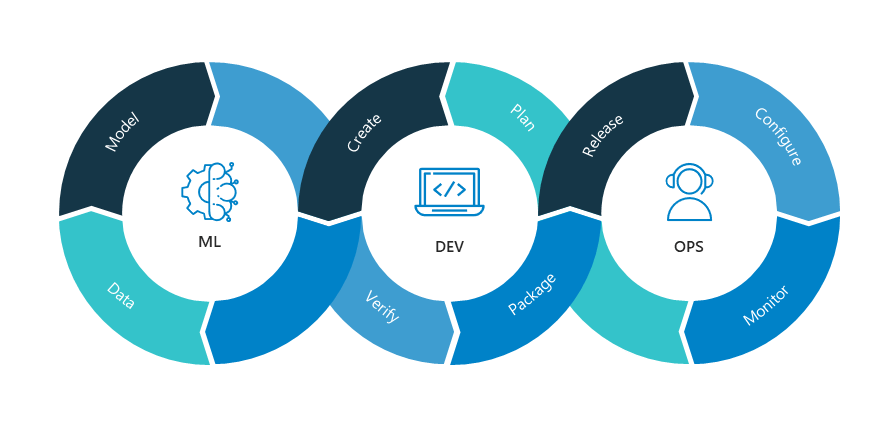

Inspired by DevOps and GitOps principles, MLOps seeks to establish a continuous evolution for integrating ML models into software development processes.

MLOps applies these principles to the machine learning lifecycle, with the goal of:

Faster experimentation and development of models.

Faster deployment of models into production.

Quality assurance and end-to-end lineage tracking.

Auditability and reproducibility across the entire workflow

A clear separation of roles within the ML team, and smoother collaboration between them

Not using MLOps - Challenges

Depending on the scale of your ML workflows, a fully integrated MLOps development mindset can save companies from challenges in the long term while building AI products.

Let’s iterate over a few:

Lack of reproducibility - ML models are data-driven and can change their behavior depending on the parameters set, so it is essential to be able to reproduce results, especially when different versions of data, code, and models are in use.

Poor Collaboration - similar to standard software development processes where different teams work on their part of the project or workflow, MLOps aims to ensure a common framework for teams (ML, Dev, Ops, IT, Business) to work together with clear roles, responsibilities, and processes.

Difficulty in Scaling - as the number of models and deployments increases, managing them without MLOps becomes increasingly complex. MLOps helps automate and streamline these processes, making it easier to scale operations.

Compliance and Data-Model Governance Issues - ensuring compliance with legal requirements and standards is more difficult without MLOps. If a company serves an ML solution to a client, and the client requests an audit, the company should be able to provide a detailed report.

Delayed Time-to-Market - due to the dynamic nature of ML development and iteration, a lack of automation and standardization can delay the deployment of models to production, which is often costly.

Data and Model Tracking - staying on the topic of auditability, the lack of automated versioning and lineage across raw data → features → model → deployment can pose significant risks, especially under the new EU AI Act.
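To make the reproducibility and tracking points more concrete, here is a minimal sketch of how a team might tie a training run to the exact data, parameters, and code it used. The function name `fingerprint_run`, the sample data, and the `abc123` commit ID are all hypothetical. The idea is only that identical inputs should yield an identical ID, and any change should yield a different one.

```python
import hashlib
import json


def fingerprint_run(data_bytes: bytes, params: dict, code_version: str) -> str:
    """Return a short, deterministic ID for a (data, params, code) combination."""
    digest = hashlib.sha256()
    digest.update(hashlib.sha256(data_bytes).digest())
    # sort_keys makes the hash independent of dict insertion order
    digest.update(json.dumps(params, sort_keys=True).encode("utf-8"))
    digest.update(code_version.encode("utf-8"))
    return digest.hexdigest()[:12]


# Hypothetical inputs: a tiny CSV payload, hyperparameters, and a git commit ID
data = b"image_id,label\n001,cat\n002,dog\n"
run_a = fingerprint_run(data, {"lr": 1e-4, "optimizer": "Adam"}, "abc123")
run_b = fingerprint_run(data, {"optimizer": "Adam", "lr": 1e-4}, "abc123")
run_c = fingerprint_run(data, {"lr": 1e-3, "optimizer": "Adam"}, "abc123")

print(run_a == run_b)  # True: same inputs, only the key order differs
print(run_a == run_c)  # False: the learning rate changed
```

Storing such an ID next to each trained model is one lightweight way to answer "which data and settings produced this model?" during an audit. Dedicated experiment trackers cover the same need more thoroughly.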

Why MLOps is different than DevOps

One frequent question that everybody asks, especially engineers coming from the software engineering side, is how MLOps compares to DevOps.

MLOps and DevOps look similar but they focus on different aspects of the development process.

DevOps focuses on streamlining the development, testing, and deployment of traditional software applications.

MLOps builds upon DevOps principles and applies them to the machine learning lifecycle. It goes beyond deploying code, encompassing data management, model training, monitoring, and continuous improvement, adding stronger assurance that the models provide real business value.

While MLOps leverages many of the same principles as DevOps, it introduces additional steps and considerations unique to the complexities of building and maintaining machine learning systems.

MLOps Processes

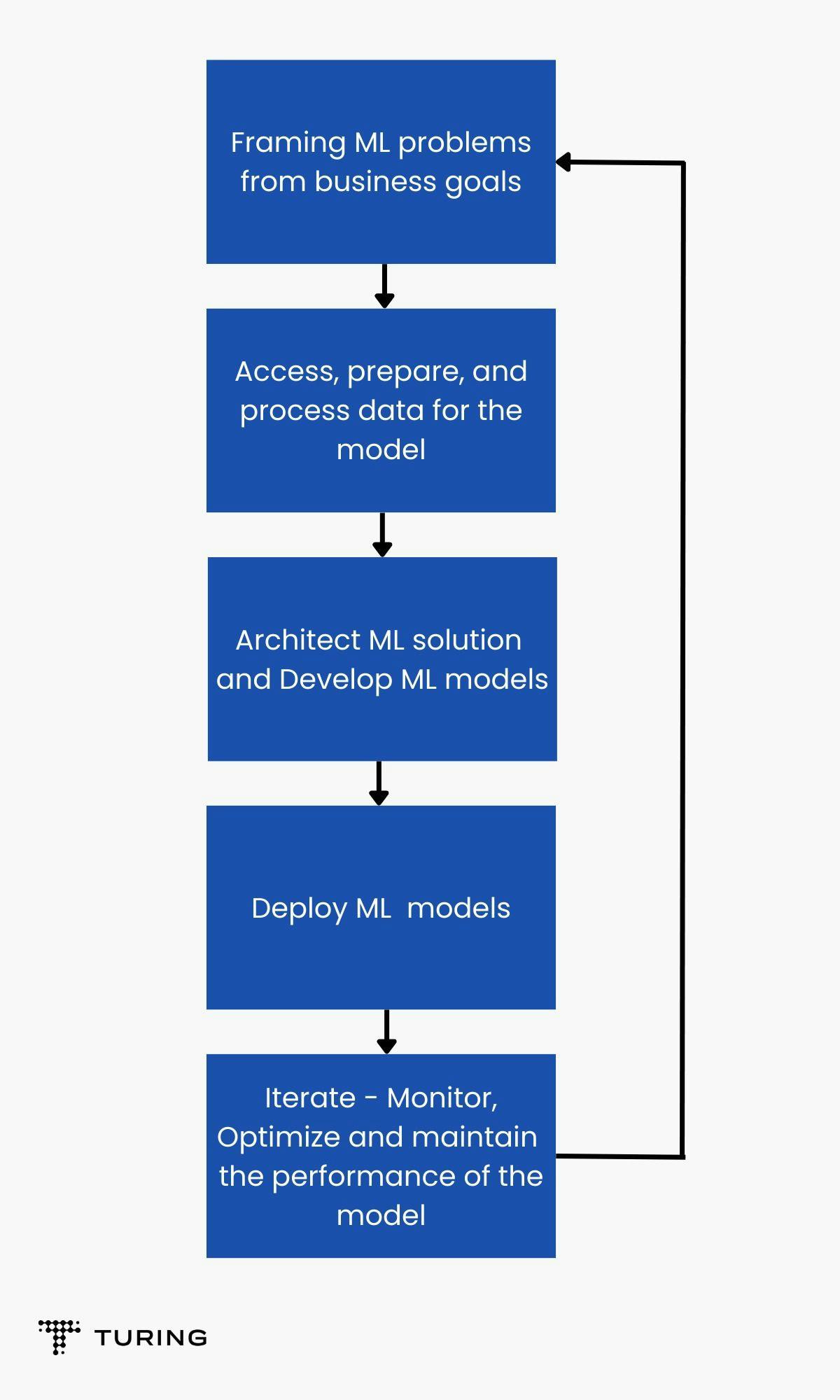

Big Tech companies define MLOps concepts, workflows, and tooling differently, but their definitions share the same set of core principles.

The span of MLOps in machine learning projects can be as focused or expansive as the project demands. In certain cases, MLOps can encompass everything from the data pipeline to model production, while other projects may require MLOps implementation of only the model deployment process.

A majority of enterprises deploy MLOps principles across the following:

Exploratory data analysis (EDA)

Exploring and understanding data that will be used to train ML models.

Involves tasks such as Data Visualization to identify patterns and Data Cleaning to remove corrupt and erroneous samples.

Data Prep and Feature Engineering

Creating new features from raw data that are relevant to model training processes.

Involves transforming and formatting the raw data into training-ready formats.

Model training and tuning

Training and optimizing ML models on feature-engineered data.

Involves selecting the right ML algorithms, training ML models, tuning and optimizing hyperparameters, and evaluating the model on test data against specified metrics.

Model review and governance

Analyzing and ensuring the ML models are developed and deployed responsibly and ethically.

Involves model validation against the desired performance, checks for model fairness and security, and interpretability work to explain and understand the ML models.

Model inference and serving

Deploying trained ML models to production and making them available for use by applications and end-users.

Involves Model Serving, selecting the right Infrastructure and resources, and building the appropriate monitoring system.

Model monitoring

Continuously monitoring the performance and behavior of the model in production.

Involves tracking metrics, scanning for model drift, and identifying overall model issues like bias, overfitting, or underfitting.

Automated model retraining

The feedback process involves re-training on fresh data once the performance of the model degrades.

Involves triggering the automated training pipeline, followed by the training and evaluation processes.
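The monitoring and retraining steps above can be sketched in a few lines. The example below uses the Population Stability Index (PSI) as the drift signal, which is one common choice and not something the processes above prescribe. The 0.2 threshold is a rule-of-thumb assumption, and the function names and sample values are hypothetical.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float], live: Sequence[float], bins: int = 10
) -> float:
    """PSI between a reference (training) sample and a live (production) sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # a small floor avoids log(0) for empty buckets
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = bucket_shares(reference), bucket_shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def should_retrain(psi: float, threshold: float = 0.2) -> bool:
    """Assumed policy: trigger the training pipeline when PSI exceeds the threshold."""
    return psi > threshold


reference = [i / 100 for i in range(100)]        # feature values seen at training time
live_shifted = [0.5 + i / 200 for i in range(100)]  # production values, shifted upward

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, live_shifted)

print(should_retrain(psi_same))     # False: identical distributions
print(should_retrain(psi_shifted))  # True: half of the reference range is now empty
```

In a real pipeline the `should_retrain` decision would typically kick off an orchestrated training job instead of printing a flag, and the check would run per feature on a schedule.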

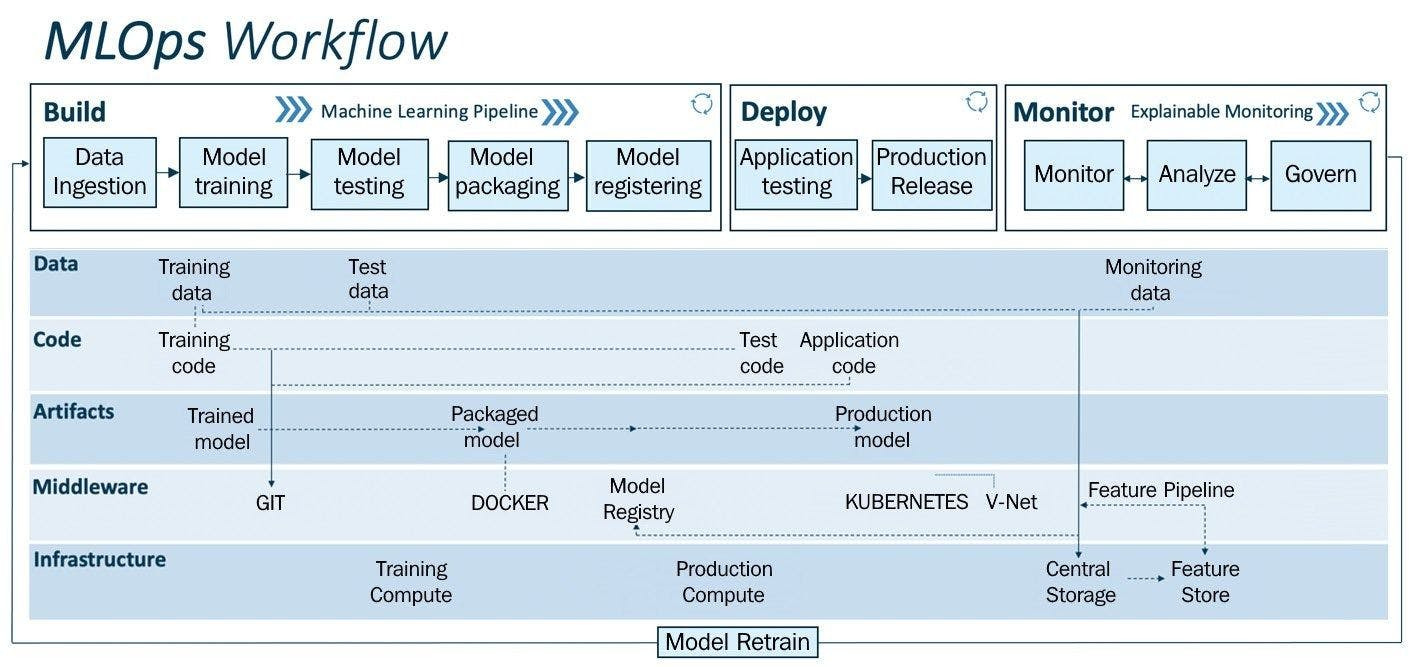

Google MLOps Automated ML Pipeline [5]

AIOps, DLOps, Model Ops and DataOps

Don’t get lost in a forest of buzzwords that have grown up along this avenue. The industry has coalesced its energy around MLOps.

AIOps is a narrower practice of using machine learning to automate IT functions. One part of AIOps is IT operations analytics or ITOA. Its job is to examine the data AIOps generate to figure out how to improve IT practices.

DataOps and ModelOps refer to the people and processes for creating and managing datasets and AI models, respectively. Those are two important pieces of the overall MLOps puzzle.

Interestingly, every month, thousands of people search for the meaning of DLOps.

They may imagine DLOps are IT operations for deep learning. But the industry uses the term MLOps, not DLOps, because deep learning is a part of the broader field of machine learning.

Despite the many queries, you’d be hard-pressed to find anything online about DLOps. By contrast, names like Google and Microsoft have posted detailed white papers on MLOps.

MLOps at BigTech and Maturity Models

When discussing “Levels of MLOps”, the 2 main maturity models that circulate consist of 4 and 5 stages.

Below is the summarized 4-stage MLOps maturity model that companies like Google, Databricks, and IBM propose in their description of MLOps:

This model gives a short overview that helps companies understand their MLOps integration level across 4 levels of automation. By briefly describing each component, it sets a common understanding of the stages where ML processes can be automated.

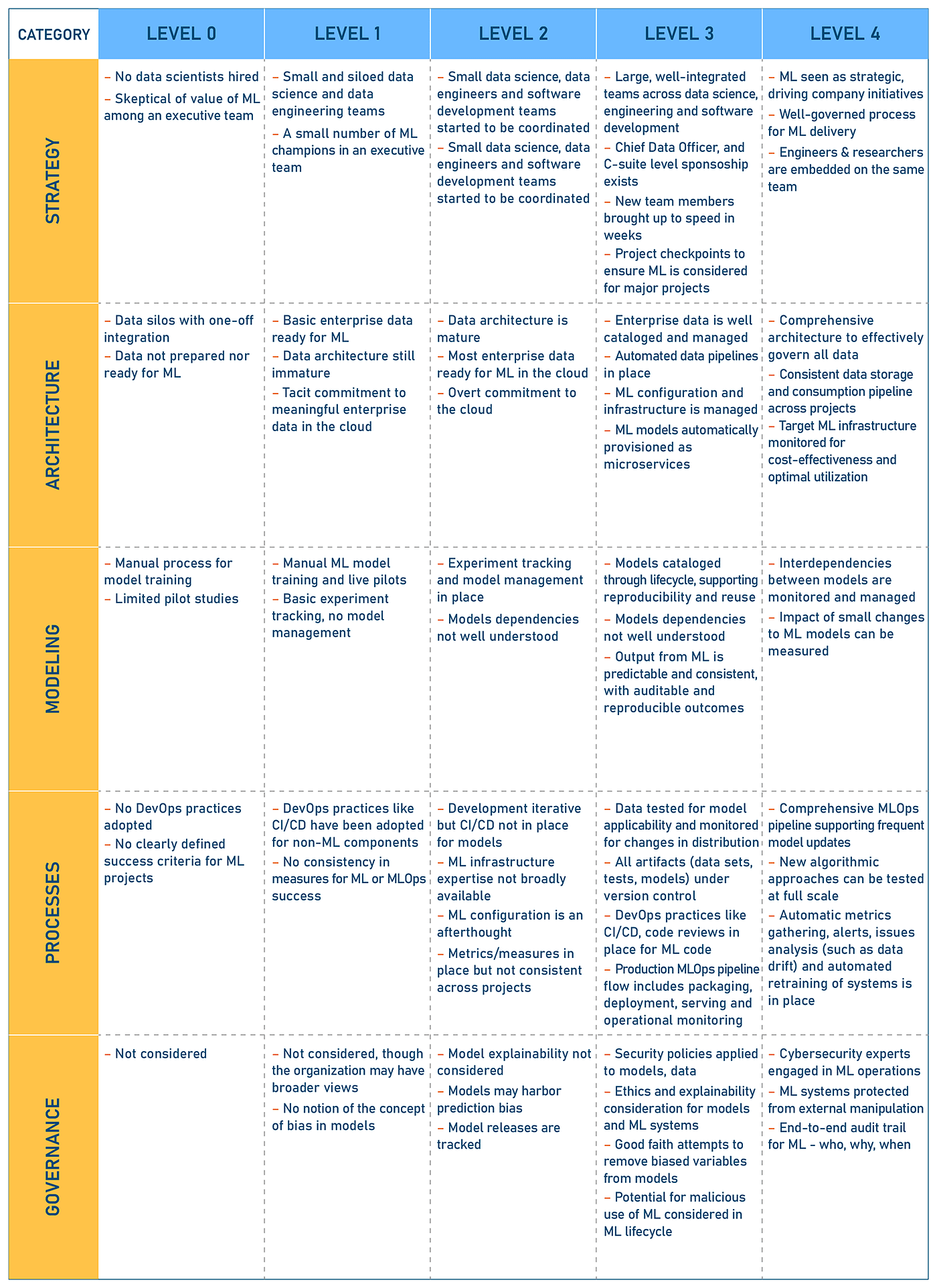

A more detailed model, composed of 5 stages of MLOps maturity, is the one proposed by Microsoft, which expands on the previous model with more details and insights:

The Microsoft model goes one step further and proposes role-based responsibilities, splitting each level into People, Model Creation, Release, and Application Integration categories.

Below is an example of these details for Level 0 - No MLOps, across these categories:

For details on Levels 1-4 of the Microsoft MLOps model, see the Azure MLOps Guide [6].

Bonus: the MLOps Maturity Model proposed by GigaOm [7] is more extensive, with insights across Strategy, Architecture, Modelling, Processes, and Governance. See it below:

How to achieve the right level of MLOps

Achieving the highest MLOps level isn't always necessary or practical.

The optimal level for your organization depends on its specific needs and resources.

MLOps represents a shift in how organizations develop, deploy, and manage machine learning models, offering a comprehensive framework to streamline the entire machine learning lifecycle. It ensures that data is optimized for success at every step, from data collection to real-world application.

There isn’t a one-size-fits-all model for MLOps; instead, companies should aim to set up processes that best fit their workflows and align with their release schedules.

For instance, a company like Netflix, which works with highly dynamic data and needs to frequently update its recommender models due to the constant addition of new content, may require a higher level of MLOps automation and integration to keep up with its development and release demands.

The success of MLOps hinges on a well-defined strategy, the right technological tools, and a culture that values collaboration and communication.

How to integrate MLOps - 2 Methods

If you’re an ML Researcher or ML Engineer, and your company is at Level 0 of MLOps Automation, you could start by integrating principles across your team.

The following advice is based on the techniques I’ve used and found helpful throughout my experience.

⭐ Very Basic Approach:

Since your team already works with ML models, you could start by researching and proposing improvements on parts of your team’s workflow. For this particular use case, let’s focus on versioning and lineage.

For example, you could manually define lineage files for your data-to-model workflow after you’ve trained an ML model. For that, you can:

Create an empty .yaml or .json file to describe the lineage, parameters, and metadata, and either attach it to your model deployment or version it in git.

In this file, you could add:

Path to the dataset (version if you have it)

Path to EDA charts or analysis if done

Metadata on model type and hyperparameters

Date when the model was trained and details on the data distribution used

Target classes or model outputs

JIRA ticket or EPIC

Here’s a basic example of a Deep Learning model for Image Classification:

    core:
      model_name: "[CLIENT_NAME]_[MODEL_TYPE]"
      task: CNN_ImageClassification
      backbone: mobilenet_v2
      loss_func: BCE
      optimizer: Adam
      learning_rate: 0.0001
      input_shape: [224, 224, 3]
      output_shape: [1]
      finetuned: true
      is_optimized: false
      sprint_epic: ML230
      version: 1
    data:
      dataset_version: custom_inhouse_enhanced_44k
      is_proprietary: true
      last_train: 2021-07-01
      stage: prototype
      dataset_analysis: "[PATH_TO_DOCUMENTATION_PAGE]"

With these files attached to the model, you can infer which dataset was used, what the purpose of the model is, which hyperparameters were used, and more. Remember that this approach only scratches the surface. Apart from versioning, it still covers the explainability and observability concepts of MLOps at a high level.
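A lineage file like this could also be written and checked by a small script at the end of training, so nobody has to remember to fill it in by hand. The sketch below uses JSON because it needs only the standard library. The `REQUIRED_KEYS` set, the helper names, and the file name `model_lineage.json` are assumptions made for illustration.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical minimum set of fields; adapt it to your team's needs.
REQUIRED_KEYS = {"model_name", "task", "dataset_version", "last_train"}


def write_lineage(path: Path, record: dict) -> None:
    """Validate a lineage record and store it as JSON next to the model."""
    missing = REQUIRED_KEYS - record.keys()
    if missing:
        raise ValueError(f"lineage record is missing: {sorted(missing)}")
    path.write_text(json.dumps(record, indent=2, sort_keys=True), encoding="utf-8")


def read_lineage(path: Path) -> dict:
    """Load a lineage record that was stored with write_lineage."""
    return json.loads(path.read_text(encoding="utf-8"))


record = {
    "model_name": "[CLIENT_NAME]_[MODEL_TYPE]",
    "task": "CNN_ImageClassification",
    "backbone": "mobilenet_v2",
    "learning_rate": 0.0001,
    "dataset_version": "custom_inhouse_enhanced_44k",
    "last_train": "2021-07-01",
    "sprint_epic": "ML230",
}

# A temporary directory stands in for the model's artifact folder.
with tempfile.TemporaryDirectory() as tmp:
    lineage_path = Path(tmp) / "model_lineage.json"
    write_lineage(lineage_path, record)
    restored = read_lineage(lineage_path)

print(restored["dataset_version"])  # custom_inhouse_enhanced_44k
```

Failing fast on missing fields is a deliberate choice here: a lineage file that silently omits the dataset version defeats its purpose.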

⭐ Advanced Approach:

This approach suits a subject matter expert who aims to introduce and propose MLOps integration across multiple teams. You have to align with every stakeholder and address multiple issues and concerns at once, to reduce friction and avoid push-back from teams that already have their own custom workflows.

Here’s a broad overview focusing on practical steps you could take, based on the approach I’ve found useful myself:

Assess the Landscape: Seek to understand the current Data and ML workflows within your company, chat with the ML Team Leaders or Project Managers, and aim to identify gaps and processes that could be automated with the lowest friction.

Analyze the Workflows: Based on the details you’ve gathered, sketch flow diagrams where you treat each use case separately. Then note common patterns each team is following, from data preparation to model training and deployment.

Tracking Systems: Evaluate how the teams track data, code, and experiments. Identify if they use Confluence, JIRA, or custom files for documenting datasets, models, and parameters. Examine how they monitor ML experiments and inspect metrics.

Defining ADRs: For each stage of the MLOps workflow (data collection, processing, model research, model training, and deployment), define an ADR (Architectural Decision Record) template and fill in the details. Your goal is to document the planning concepts.

Within the ADR, present the current workflow, challenges, and problems you identified in the first three steps.

Present the target challenges you aim to solve with the integration, based on the problems identified in the previous point. Here’s an example of an ADR for MLOps Integration

Solution Design and POC: Based on ADRs, start the solutioning process, where you research and propose a tool for each component (e.g., Prefect for Data Pipelines, Kubeflow for ML Pipelines, W&B for Experiment Tracking, Terraform for IaC). Sketch solution diagrams, update ADRs, and construct a high-level plan to build a Proof of Concept (POC).

Stakeholder Update: Remember that your main goal is to improve efficiency, automate, reduce costs, and ease the workflows that ML teams follow, providing a standardized and future-proof plan to streamline the deployment of new models or model updates.
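To illustrate the ADR step from the list above, here is a minimal sketch of an ADR template rendered as text. It loosely follows the widely used Title / Status / Context / Decision / Consequences layout, with an extra Challenges section for the problems found during assessment. The class, its fields, and the example content are hypothetical, not a prescribed format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ADR:
    """A small, hypothetical Architectural Decision Record template."""

    title: str
    status: str
    context: str
    decision: str
    challenges: List[str] = field(default_factory=list)
    consequences: List[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        lines = [f"# ADR: {self.title}", "", f"Status: {self.status}", ""]
        lines += ["## Context", self.context, ""]
        lines += ["## Challenges"] + [f"- {c}" for c in self.challenges] + [""]
        lines += ["## Decision", self.decision, ""]
        lines += ["## Consequences"] + [f"- {c}" for c in self.consequences]
        return "\n".join(lines)


# Example content is invented for illustration only.
adr = ADR(
    title="Experiment tracking for ML teams",
    status="Proposed",
    context="Teams document experiments in custom files and wiki pages.",
    decision="Run a POC with W&B for experiment tracking on one project.",
    challenges=[
        "Metrics are not comparable across teams",
        "No link between a model and the dataset version it was trained on",
    ],
    consequences=[
        "Teams need a short onboarding session",
        "Existing experiment notes must be migrated or archived",
    ],
)

print(adr.to_markdown())
```

Keeping ADRs as plain text files in the repository means they can be reviewed and versioned like code, which helps when several teams need to sign off on the same decision.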

Conclusion

In this article, we’ve covered a 2024 snapshot of AI adoption worldwide, based on statistics from IBM [3], McKinsey & Company [1], and VentionTeams™ [2]. We went over the challenges of AI adoption, saw that only about half of AI pilot projects reach production, and then covered the concept of MLOps and the maturity models presented by Big Tech.

We’ve pointed out the MLOps principles, processes, and plans to achieve and integrate MLOps in your company or team workflows. We also presented 2 practical methods to start integrating MLOps principles, from basic data-model lineage up to Architectural Decision Records (ADRs) for multiple stakeholders.

References

Link | Title | Year of Publishing

[1] McKinsey & Company, State of AI Adoption, 2024

[2] VentionTeams™, AI Adoption Statistics Snapshot, 2024

[3] IBM Global AI Adoption Index, The IBM AI Adoption Index, 2023

[4] MLOps, The MLOps Paper, 2022

[5] Google MLOps, Google’s proposal of an automated CT ML Pipeline, 2024

[6] Azure MLOps Guide, Azure ML Documentation, 2024

[7] GigaOm, Delivering on the MLOps Maturity Model, 2020
