The Smartest AI Engineers Will Bet on This in 2026

A no-BS breakdown of where to invest your time, backed by real industry insights.

2025 has been the year of LLMs and reasoning models pushing toward agents and agentic workflows.

That’s why I’ll spend 2026 focused more on system design, architecture, scale, inference, and core engineering fundamentals.

We now have a wide range of models to choose from, cheaper inference, and plenty of guidebooks on how to prototype with LLMs, from RAG pipelines to basic workflows and agents. Getting something working is no longer the hard part.

What’s still hard is running these systems in production.

Across the industry, only a small number of teams have managed to move beyond pilots and demos. And when systems fail, it’s rarely because of the model itself. It’s the engineering around the model: how systems are designed, monitored, tested, and improved over time. These are the same problems software teams have always faced, but made harder this time, due to the non-deterministic behavior of AI Systems.

In 2026, the most valuable work is learning how to build AI systems that hold up under real usage. New abstractions will come and go, including agents, but they only create value when they sit on top of well-designed, maintainable systems.

This article is about that gap.

What you’ll get from this article:

What are the 2025 MIT, BCG, and Gartner reports on AI saying?

Where should an AI Engineer invest their time?

How to avoid confusion and hype cycles?

An actionable plan for engineers across AI Foundations, Engineering, and Systems.

AI Adoption is Moving Slower

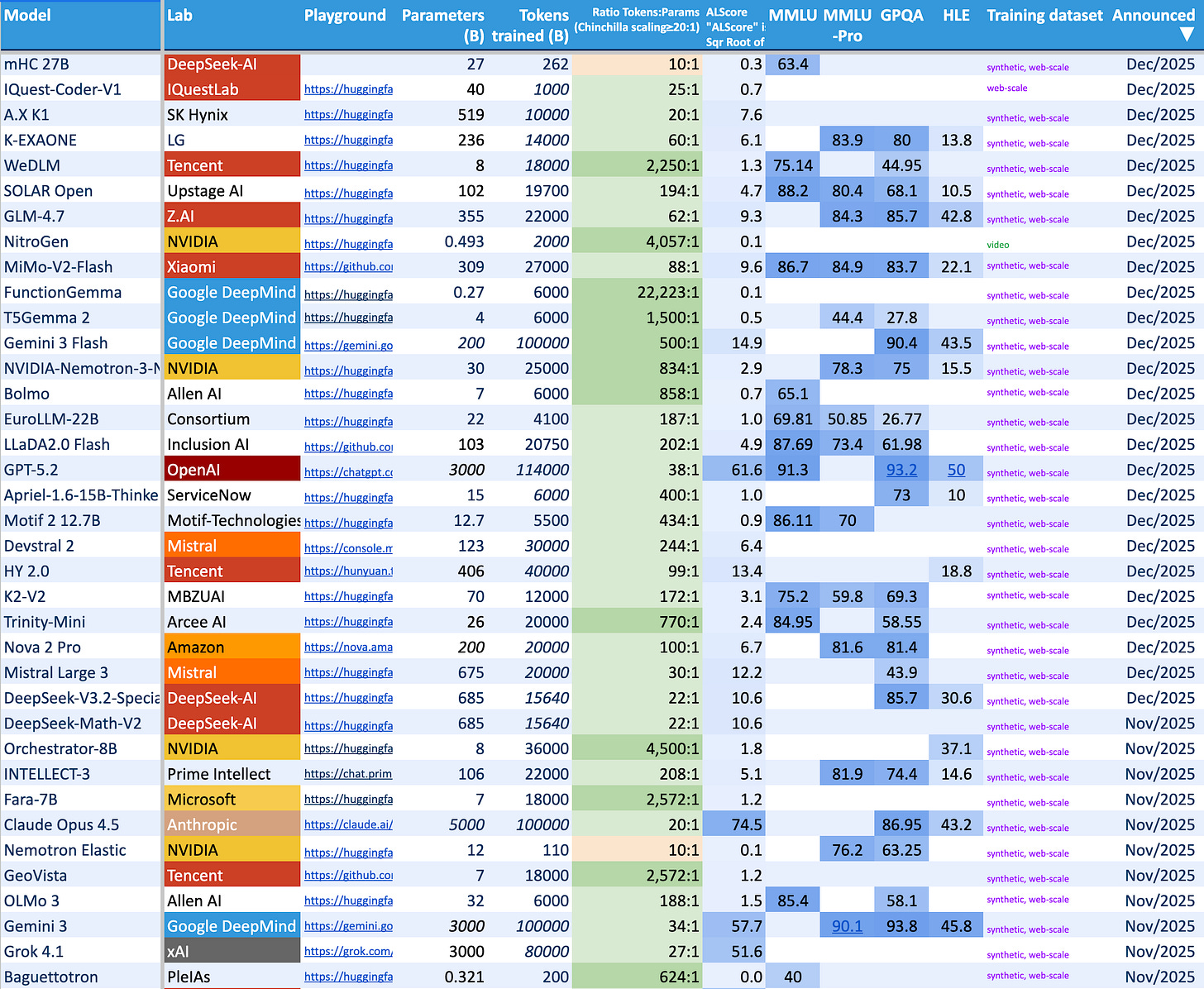

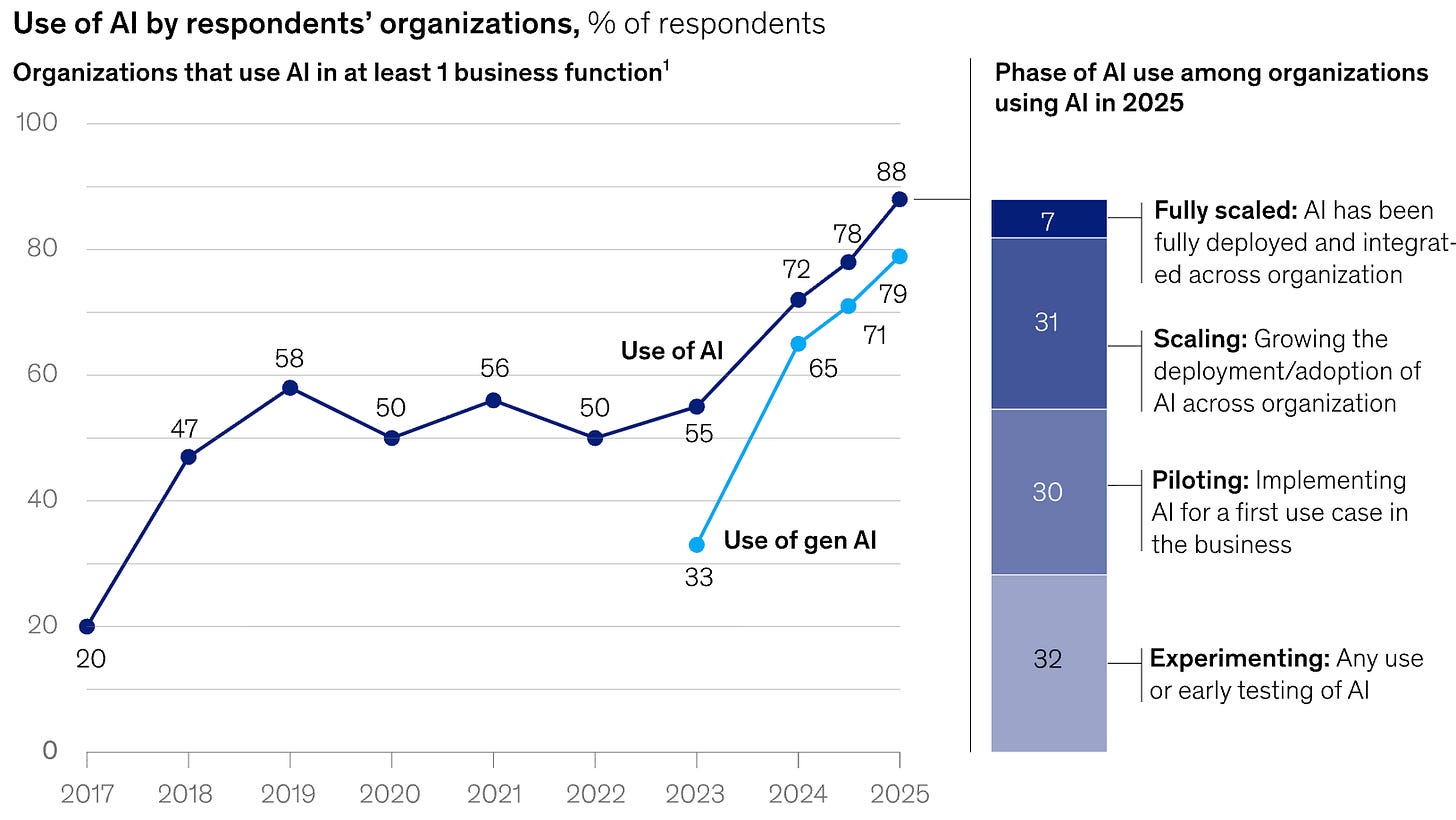

Reading through McKinsey’s 2025 survey [13], it turns out that even though almost 88% of companies use AI in at least one business function, nearly 60% of them are still stuck in research and experimentation.

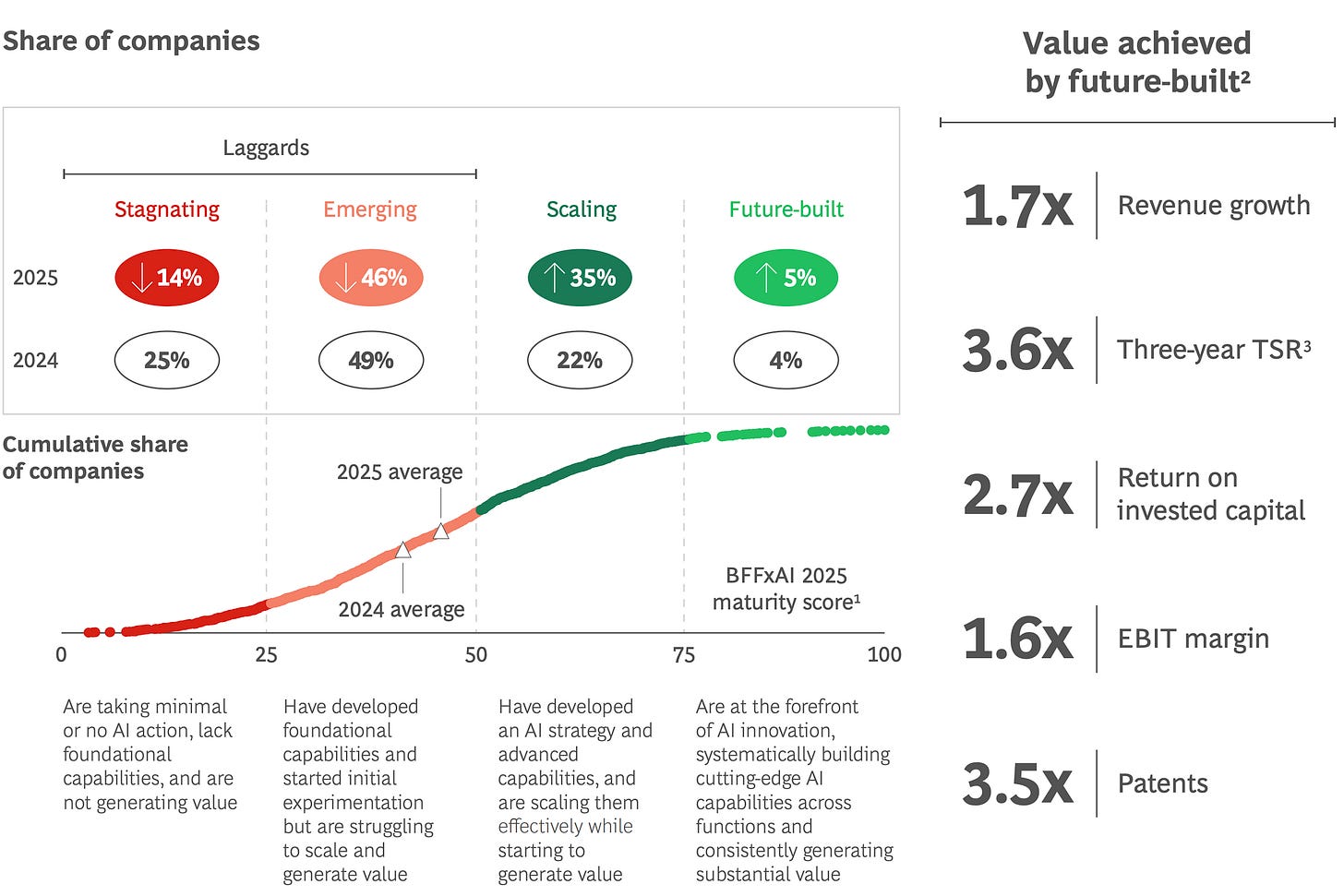

Similarly, the BCG’s [8] research shows only 5% of firms are “future-built” AI leaders, seeing significant bottom-line value; these are the infra-owners, the frontier-AI labs, and large companies that use AI as a backbone.

They have the talent, compute, resources, and leverage to do so.

Three concrete examples:

Google - massive search context, default user behavior, and existing distribution to build the “AI Mode” on Google Search.

Perplexity - one of the first to add source citations baked directly into answers.

NVIDIA - seasoned talent, vertical integration, chips → simulators → models and large-scale real and synthetic data on multiple modalities (i.e., NVIDIA Isaac Sim, Omniverse, Nemotron).

On the other side, 60% report minimal or no gains from AI; these are the laggards. Since models became cheaper per generated token, and the industry caught up on cookbooks and best practices to follow when building with AI, you’d think building and scaling would be easier.

What does that mean?

Most of the companies won’t have an “AI-first” strategy, but an “Integrate AI” strategy. That isn’t new, as it’s been that way for the past two years.

That means we’re entering the Scaling phase, where PoCs must become resilient AI Systems running in production.

In the following chart from the Boston Consulting Group report, we can see that the median moved a bit in 2025 compared to last year, but most companies are still stuck between the Emerging and Scaling phases.

If we zoom out on this infographic, the 60% made up of the Laggards in the first two columns is moving from no interest in AI to building foundational capabilities.

That isn’t about the “using AI models” part, but about getting up to speed on talent, system design at scale, and business functions, which translates to:

“Understanding” - people learn how AI and its components work

“Designing” - the business functions where AI could act as leverage

“Building” - the core components and experimenting

What does that mean for an AI Engineer?

To end this section: the best position for an engineer in this phase, and through next year’s advancements, is learning how to scale an AI System and bring value out of it.

The Engineers who can operationalize, scale, and measure AI systems will be more valuable than those who can prototype with new models.

An AI Engineer’s Focus

For most engineers, unless you’re working in applied research (frontier labs) or high-performance scaling (inference providers, GPU kernels), the job isn’t about pushing models forward.

It’s about making systems work reliably.

A year ago, I wrote about why MLOps fails in production and why nearly half of projects fail to scale into real value. At the time, the discussion was framed around classical Machine Learning and Deep Learning: models, engineering, pipelines, and deployment workflows.

The names have changed since then, but the problem hasn’t. (Read it Here)

We might’ve switched from ML Engineering to AI Engineering, from Deep Learning to Generative AI - but the problem is still there. Most of these systems don’t require AI as a backbone; we’re far from fully autonomous agents, where the autonomy slider is set to maximum.

They do require solid engineering, however.

There is a big difference between “AI everywhere” and “AI delivering value everywhere”, even if full-agentic sounds good and trendy.

Here’s a clearer signal to reinforce the above. ↓

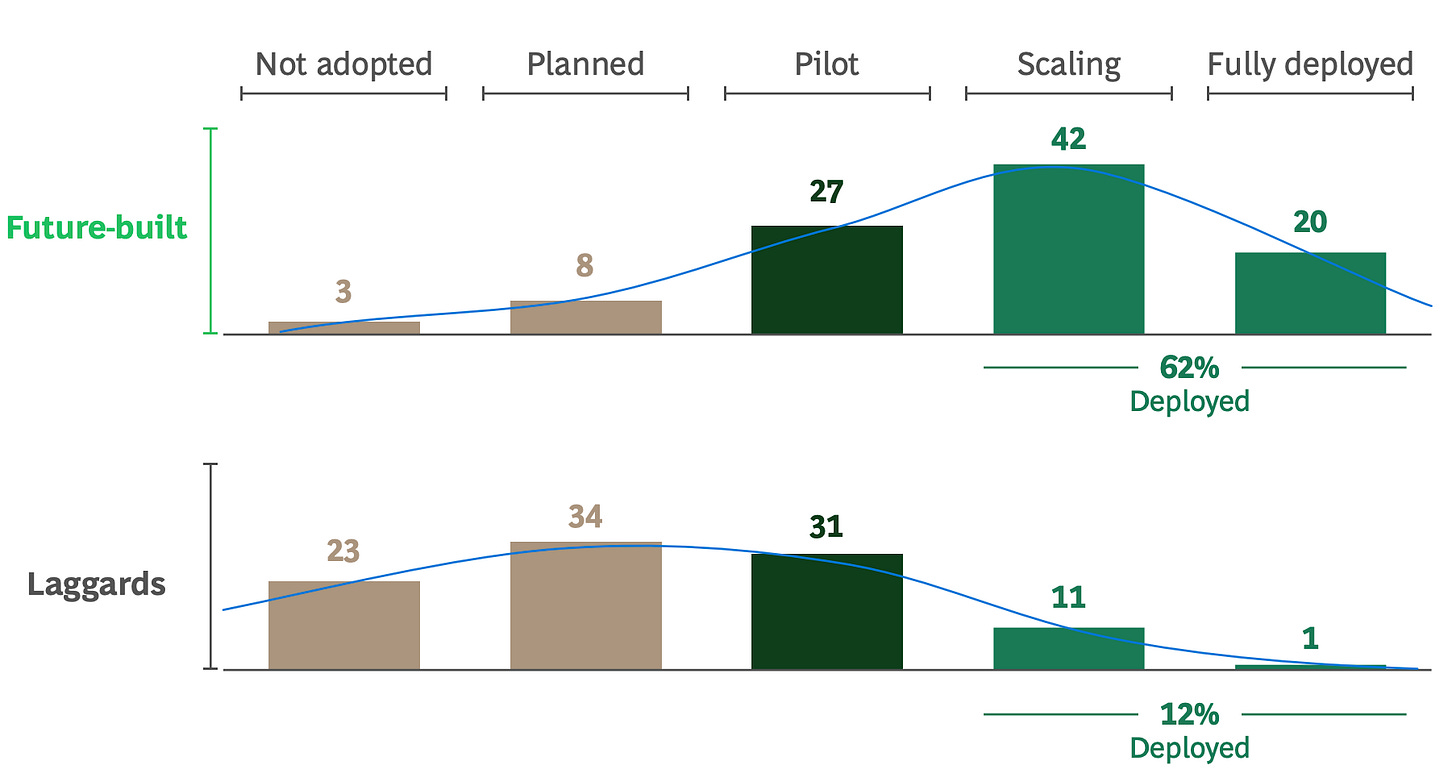

There’s clearly an execution gap. Projects don’t fail because of models, but because they can’t reliably move from pilot to production. This idea goes back 3-4 years, to when MLOps was a hot topic of discussion in the AI community.

The features are there, the models are there, but the pipelines, monitoring, drift detection, golden sets, and continuous improvement and calibration are lacking or not designed properly for production.
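To make “golden sets” a bit more concrete, here is a minimal sketch of a regression check that could run before each release. Everything in it is an assumption for illustration: the `GoldenCase` structure, the substring-based check, the 5% tolerance, and the `fake_system` stand-in that replaces a real model call so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class GoldenCase:
    prompt: str
    must_contain: str  # substring the answer is expected to include


def pass_rate(system: Callable[[str], str], cases: list[GoldenCase]) -> float:
    """Fraction of golden cases whose output contains the expected substring."""
    if not cases:
        raise ValueError("golden set is empty")
    passed = sum(
        1
        for case in cases
        if case.must_contain.lower() in system(case.prompt).lower()
    )
    return passed / len(cases)


def has_regressed(current: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag a regression when the pass rate drops more than `tolerance` below baseline."""
    return current < baseline - tolerance


# Hypothetical stand-in for a real model call, so the sketch runs offline.
def fake_system(prompt: str) -> str:
    answers = {"capital of France?": "The capital is Paris."}
    return answers.get(prompt, "I don't know.")


GOLDEN_SET = [
    GoldenCase("capital of France?", "paris"),
    GoldenCase("capital of Japan?", "tokyo"),
]

rate = pass_rate(fake_system, GOLDEN_SET)
print(f"pass rate: {rate:.2f}, regressed: {has_regressed(rate, baseline=0.90)}")
```

A real setup would likely swap the substring check for a task-specific scorer and store the baseline alongside the model and prompt versions, but the shape stays the same: a fixed set of cases, a score, and a threshold that blocks the release.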

This leads to the following question: “How does an AI engineer position themselves?”

Before answering that, it’s worth grounding ourselves in the hype cycles - because they explain why some people are fully invested in AI, while others are exhausted by the headlines.

Avoid the Unnecessary Confusion

It’s funny how AI feels like living inside contradictory headlines:

“Agents are here” and then “This is the decade of Agents” (A.Karpathy)

“AI writes all the code” and then “We’re hiring Senior Engineers” (Microsoft)

“AI will replace Software Engineers in 6 months” (D.Amodei)

These statements aren’t mutually exclusive, but seeing them side by side, and especially seeing them everywhere, creates a kind of fatigue. I think that’s probably one reason many experienced engineers don’t rush headfirst into fully AI-driven approaches. A few conflicting claims, from people in the field, are often enough to make someone pause and wait for clearer signals.

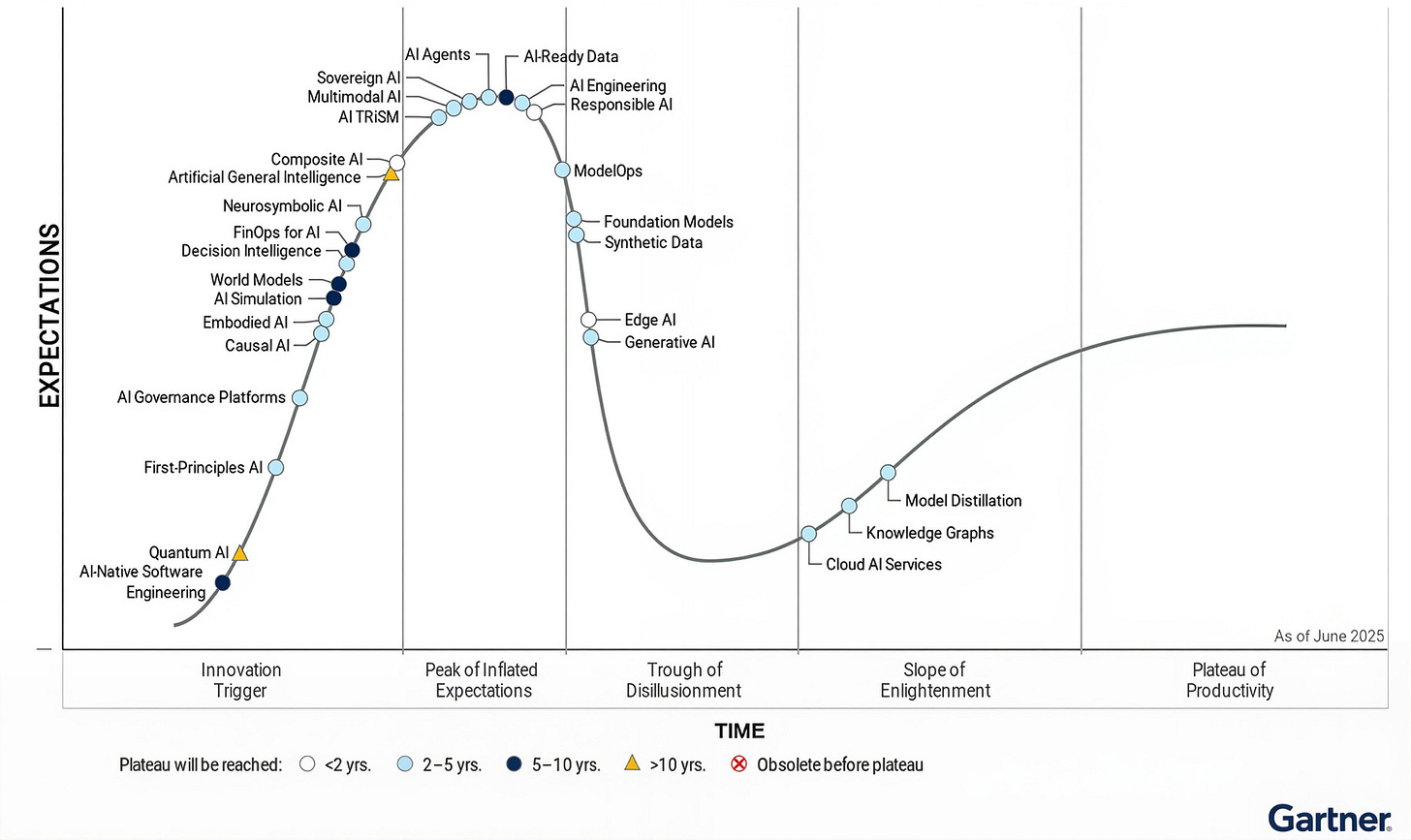

And then, there’s this chart, which was very popular last year ↓

This is the Gartner Hype Cycle chart, which helps businesses understand when to invest in emerging tech, mapping unrealistic hype against real-world value over time, with cycles often taking 3-10 years.

Engineers who’ve built real systems tend to be skeptical by default, usually taking the slower path: try the tools, see where they help, notice where they fall apart, and only then decide where they fit.

If you’re a Software Engineer, Data Engineer, Data Scientist, ML/MLOps/AI Engineer, or have built or worked on real projects, you’re probably tired of the hype around AI, and that’s a valid feeling that many people share. Maybe a few years ago, when a new model or something with Agents in it was announced, you became curious and excited.

Nowadays, you just default to what’s proven to work, and pay way less attention to the new shiny things that end up not delivering.

Speaking of shiny things, let’s walk through a few examples, directly correlated with the Hype-Cycle chart:

Rabbit R1 (Inflated Expectations) - the agentic device released in January 2024, marketed as one that could execute actions across apps, thanks to the Large Action Model (LAM). In reality, brittle UI automation.

Devin (Peak → Trough) - the first AI Software Engineer, making SW Engineers worry about their jobs. In reality, it's expensive and loses context on real projects and complex codebases.

Autonomous Agents (Trough of Disillusionment) - advertising fully agentic AI, replacing jobs, automating everything, or saving 90% costs. In reality, most projects rolled back to human-in-the-loop and workflows.

That circles back to the points raised in the sections above. The engineering effort is moving slowly but steadily, as many companies may have built their first PoC and Pilot projects, but hit a wall on scaling and bringing solutions into production.

What should you focus on?

At this point, the question isn’t whether AI will matter - it already does.

The real question is where to invest your time, when most tools, models, and trends either won’t make it to production or will introduce tech debt, forcing teams to migrate to in-house tools or to other ones entirely.

That’s why I structure this section into three pillars: Foundations, Engineering, and Systems.

It closely resembles the new direction I’m taking with this publication, moving away from deep walkthroughs on various, unstructured topics toward structured, ladder-like paths - something I’ve been planning since October.

I’m intentionally not listing dozens of resources. People like structured lists. They bookmark them. They rarely finish them.

Instead of outlining 50+ links, I’ve limited each topic to two or three high-quality resources that I consistently read and go through. I don’t want to create another roadmap, but a short, actionable path you can actually follow.

My recommendation is to pick one resource per pillar from those listed and study it for a few months.

Now let’s get to the part you’ve been expecting.

#1 AI Foundations

There’s this misconception that only AI Research engineers, or the ones building efficient GPU Kernels, training Foundation Models, or working at the bleeding edge of AI, must master the foundations.

That’s not true. Foundations show up for everyone the moment you ship AI to real users.

Even if you don’t need to master distributed training, large-scale data patterns, and GPU topologies, that doesn’t mean understanding Context Length, Sampling Parameters, Inference, Quantization, or the basic math behind AI doesn’t matter for you.
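As a small illustration of what “Sampling Parameters” actually do, here is a sketch of temperature scaling and top-p (nucleus) filtering over a toy vocabulary. The logits are made-up numbers and the function names are my own; real inference stacks implement this on tensors, but the logic is the same idea.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: list[float], top_p: float) -> list[float]:
    """Keep the most likely tokens until their mass reaches top_p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]


def sample_token(logits, temperature=1.0, top_p=1.0, rng=None) -> int:
    """Sample one token index after applying temperature and top-p."""
    rng = rng or random.Random()
    probs = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


toy_logits = [2.0, 1.0, 0.1]  # hypothetical scores for a 3-token vocabulary
cold = softmax_with_temperature(toy_logits, 0.5)
hot = softmax_with_temperature(toy_logits, 2.0)
print([round(p, 3) for p in cold])  # mass concentrates on the top token
print([round(p, 3) for p in hot])   # mass spreads across tokens
print(sample_token(toy_logits, temperature=0.7, top_p=0.9, rng=random.Random(0)))
```

Comparing the two printed distributions is usually enough to build the intuition: temperature controls how peaked the distribution is, and top-p cuts off the unlikely tail before sampling.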

I’m intentionally focusing less on Machine Learning as you’ll pick up a lot of ML concepts naturally while studying deep learning.

But in case you want a list of the ML fundamentals you should consciously cover:

#1.0 Machine Learning

Supervised vs unsupervised learning

Overfitting / underfitting / Regularization (L1, L2)

Train / validation / test splits

Gradient descent (conceptually and mathematically)

All of these concepts are covered in The Hundred-Page Machine Learning Book, A.Burkov.
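If you want to see two items from the list above working together, here is a minimal sketch of gradient descent fitting a line, with an optional L2 penalty on the weight. The data, learning rate, and step count are arbitrary choices for illustration, not tuned values.

```python
def fit_line(xs, ys, lr=0.05, l2=0.0, steps=2000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error plus l2 * w**2."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the loss with respect to w and b.
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs)) + 2 * l2 * w
        grad_b = (2 / n) * sum(errors)
        # Step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1

w_plain, b_plain = fit_line(xs, ys)
w_reg, b_reg = fit_line(xs, ys, l2=1.0)
print(f"no regularization: w={w_plain:.3f}, b={b_plain:.3f}")
print(f"with L2 penalty:   w={w_reg:.3f}, b={b_reg:.3f}")
```

Without the penalty, the fit recovers the line the data came from. With it, the weight is pulled toward zero, which is the trade-off regularization makes: a slightly worse fit on the training data in exchange for a simpler model.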

#1.1 Mathematics

You don’t need to derive gradients by hand; it’s enough to get the basic intuition for linear algebra, probability, and optimization to reason about model behavior.

3Blue1Brown YouTube Channel, Grant Sanderson

Most probably, you’ve already seen videos from 3B1B. In case you haven’t, the step-by-step animations help you understand the logic behind AI.

The Palindrome Newsletter, Tivadar Danka

Frankly, one of the best and most up-to-date resources on Math behind AI/ML. Tivadar does a great job explaining complex concepts with simple terms.

Don’t spend too much time on maths; understanding the core principles is enough.

#1.2 Deep Learning

The term Deep Learning might have fallen out of fashion, replaced by the general AI or GenAI term. But for someone working in the AI field, Deep Learning is the foundation on top of which Language Models, Vision Models, and Generative AI are built.

Two resources to get started with Deep Learning:

Introduction to Deep Learning, MIT 2025 Playlist.

Neural Networks, model training, distillation, optimization, and finetuning - all of these live under the Deep Learning umbrella. This MIT Playlist is a great up-to-date resource on understanding what Deep Learning is.

NVIDIA Deep Learning Institute

A rich collection of industry-relevant technical trainings from NVIDIA that teach the broader spectrum of DL.

If you want to understand Generative AI, LLMs and Diffusion, you must understand the core components of Deep Learning first.

#1.3 Generative AI

This is what most of the AI industry keeps an eye on, and where most engineers operate today. At the same time, it’s where misunderstandings are common.

Most resources listed will focus on Language Modeling:

Large Language Models, Stanford CME 295 Playlist

If you’re somewhat experienced, take Lectures 5, 7, 8, and 9. For beginners, add 1 and 2 to the list. For everyone, watch the CS25 V5 lecture from Josh Batson at Anthropic.

Language Modeling from Scratch, Stanford CS336 Playlist

These explain everything around LLMs, the architectures, how they’re trained, why scaling works, and where it breaks down.

A few good books on the topic (pick one):

LLMs from Scratch, Sebastian Raschka

If you want a step-by-step walkthrough on building an LLM from scratch with PyTorch.

Hands-On Large Language Models: Language Understanding and Generation, J.Alammar and M.Grootendorst

A real deep dive on everything about LLMs, from NLP (Natural Language Processing) to understanding newer architectures and training.

Generative Deep Learning, David Foster

I recommend this one, as apart from Language Models, it also covers the multimodal segment, models that can generate Audio, Image, and Video.

For minimal investment, look into understanding how LLMs work, and how to evaluate LLM-based systems.

#1.4 LLM-based Systems

Agents and Agentic Workflows are becoming more and more popular. However, it is still a field surrounded by hyped claims. Fully autonomous systems still require a lot more work, or as A.Karpathy put it “We’re in the decade of Agents, not the year of Agents”.

You don’t need 100 resources on Agents and Workflows, you only need this one.

Agentic Design Patterns, A.Gulli

This is a free, 400-page book published by a Google Sr Director and Distinguished Engineer, covering everything you need to know about Agents, Workflows, Memory, and Agency.

I’m at Chapter 20, close to finishing it, but I’ll surely read it again shortly, as it’s information-dense. Hands down, a great resource that gathers everything in one place.

#2 Engineering

Any engineer from the pre-2023 (ChatGPT) era will agree with this.

There’s another misconception that’s quite popular in the AI field, and I think few people actually address it to cut through the hype.

“AI engineers don’t really need strong software engineering skills - agents can handle most of the code.”

AI-assisted coding doesn’t replace engineering - it exposes it. When everyone can write code, quality comes from thinking clearly: understanding the problem, designing the system and its intricacies, and only then writing the implementation.

If you want to build solid AI Systems that reach production, you need to be a good software engineer first.

I’m going to split the resources into 2 pillars: Architecture and Programming.

#2.1 Architecture

This is less about templates to structure your projects and more about building robust codebases that are easy to evolve and maintain.

Clean Architecture, R.C.Martin

Even if published a decade ago, it still holds up, as it solves major software development pain points by isolating business logic from technical details (UI, database, frameworks).

Fundamentals of Software Architecture, M.Richards and N.Ford

Goes through architectural patterns (monoliths, microservices, event-driven).

You don’t need to know everything: DDD, Clean Architecture, or Vertical Slice. But you must understand the basics of Monoliths, Microservices, and Event-Driven Architectures.

#2.2 Programming

This depends on your primary stack, but the principle is universal.

Even if you’re using AI agents like Claude or Codex to write code faster, all you’re really doing is amplifying whatever quality already exists. If your code is hard to read, hard to test, or full of hidden side effects, AI will scale those problems - not fix them.

As this becomes the default, the work will shift away from writing code toward reading and reviewing it, with clarity mattering more than speed.

You don’t need to know every language. Python is enough.

Effective Python, Brett Slatkin

A great practical guide to writing clear, idiomatic, and maintainable Python.

Fluent Python, Luciano Ramalho

Extensive, going through Python's core language features and libraries.

If you’re already programming in Python, study the Effective Python book. If you’re new to Python, skim through Fluent Python, then Effective Python.

These are large books; the best way to get value out of them is to jump to the chapter you need, skim through the pages, and take notes.

(Bonus) Learning Go is another long-term safe bet in AI/ML.

Effective Go, go.dev

If you’re already familiar with Python and want to pick up another language that’s close to Python, Go is the best candidate.

Learning Go, Jon Bodner

A deeper walkthrough on Go's idioms, and how to avoid recreating patterns that don't make sense in a Go context.

I’ve been programming in Go for the past 6 months, and I love it. I can finally understand the Ollama codebase.

#3 AI Systems

System Design is mandatory; every tech interview will have a System Design part.

Good design translates into stable software, or at least software that’s easier to evolve. Past the architecture and past the code that implements features, software must scale, be easier to maintain, and be resilient.

With AI, this becomes harder.

We introduce non-determinism into software, which means assumptions break more often and in less obvious ways. The outputs will vary, costs fluctuate as AI Systems require way more compute than traditional ones, and designing a system right from the get-go is more important than building it.
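One common way to contain that non-determinism is to validate every model output and retry when it doesn’t match what the rest of the system expects. The sketch below is a minimal version of that idea, under assumptions of my own: the model is any callable that takes a prompt and returns text, the expected output is a JSON object with known keys, and `make_flaky_model` is a hypothetical stand-in that replays canned responses so the example runs offline.

```python
import json
from typing import Callable


def call_with_validation(
    model: Callable[[str], str],
    prompt: str,
    required_keys: set[str],
    max_attempts: int = 3,
) -> tuple[dict, int]:
    """Call `model` until it returns a JSON object containing the required keys.

    Returns the parsed object and the number of attempts used, so callers
    can track how often retries (and their extra cost) happen.
    """
    last_error = "no attempts made"
    for attempt in range(1, max_attempts + 1):
        raw = model(prompt)
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"invalid JSON: {exc}"
            continue
        if isinstance(parsed, dict) and required_keys.issubset(parsed):
            return parsed, attempt
        last_error = "missing required keys"
    raise ValueError(f"no valid output after {max_attempts} attempts ({last_error})")


def make_flaky_model(responses: list[str]) -> Callable[[str], str]:
    """Hypothetical model stub that replays canned responses in order."""
    pending = iter(responses)
    return lambda prompt: next(pending)


flaky = make_flaky_model([
    "Sure! Here is the answer",          # not JSON at all
    '{"label": "spam"}',                 # valid JSON, missing "score"
    '{"label": "spam", "score": 0.9}',   # valid and complete
])
result, attempts = call_with_validation(flaky, "Classify: ...", {"label", "score"})
print(result, attempts)
```

The attempt count is worth logging: every retry is another paid model call, so a rising retry rate shows up as both a reliability and a cost signal.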

Best guides and books I follow and read on System Design:

ByteByteGo, A.Xu

One of the most popular and best resources on understanding how large systems running at scale in production are designed and built.

Designing Data-Intensive Applications (DDIA), M.Kleppmann

Not focusing on AI per se, but it’s a great resource to understand how real systems behave under load and scale.

System Design Playbook

Massive thanks to Neo Kim for compiling it and making it free.

For AI Systems specifically, I recommend:

AI Engineer (YouTube)

One of the few places that discusses AI application design from a systems and production perspective, a lot of great talks were uploaded recently.

AI Engineering, Chip Huyen

This book needs no introduction. I can describe it as an extensive zero-shot introduction to AI Engineering; it’s not too technical, but packed with details.

What you need is not more resources to bookmark, but one or two solid entrypoints on all these pillars.

The next step is to apply these learnings in your own work, and that’s where I’m helping with The AI Merge.

Closing Thoughts

My goal with this article was to give an overview of the industry's current state, analyzing the 2025 industry reports from Gartner, MIT, and Boston Consulting Group.

All of them outline the same idea: the current gap in AI Systems is execution.

Building demos is easy; morphing them into a system and deploying in production is a different game.

The three pillars I’ve outlined, Foundations, Engineering, and Systems, aren’t just theoretical; they form an efficient bottom-up learning plan that focuses more on the Engineering side of things than on surface-level AI features.

At the end of the day, I believe the best AI Engineers are Software Engineers at their core, people who’ve built the right mental models, understanding how software works, how to build and scale it, how systems work, and how to design one.

This knowledge ports easily to AI Systems.

That’s the direction this publication, and the learning paths behind it, will continue to follow.

Would love to hear your thoughts 💬

What’s your take on the new Knowledge Pillars paths - Foundations, Engineering, and Systems?

If you’re an engineer, how do you see this execution gap actually closing?

References:

[1] AI Engineer. (n.d.). AI Engineer. https://www.ai.engineer/

[2] Huyen, C. (2026). AI Engineering: Building Applications with Foundation Models. Amazon.com. https://www.amazon.com/AI-Engineering-Building-Applications-Foundation-ebook/dp/B0DPLNK9GN

[3] O’Reilly Media. (n.d.). Designing Data-Intensive Applications. https://www.oreilly.com/library/view/designing-data-intensive-applications/9781491903063/

[4] NVIDIA. (n.d.). Training and Certification. https://www.nvidia.com/en-us/training/

[5] Danka, T. (n.d.). The Palindrome Newsletter.

[6] Martin, R. C. (n.d.). Clean Architecture: A Craftsman’s Guide to Software Structure and Design. https://www.amazon.com/Clean-Architecture-Craftsmans-Software-Structure/dp/0134494164

[7] Stanford University Human-Centered AI. (n.d.). 2025 AI Index Report: Technical Performance. https://hai.stanford.edu/ai-index/2025-ai-index-report/technical-performance

[8] Boston Consulting Group. (n.d.). The Widening AI Value Gap. https://media-publications.bcg.com/The-Widening-AI-Value-Gap-Sept-2025.pdf

[9] Gartner. (n.d.). Gartner Hype Cycle Identifies Top AI Innovations in 2025. https://www.gartner.com/en/newsroom/press-releases/2025-08-05-gartner-hype-cycle-identifies-top-ai-innovations-in-2025

[10] MIT. (n.d.). State of AI in Business 2025 Report. https://mlq.ai/media/quarterly_decks/v0.1_State_of_AI_in_Business_2025_Report.pdf

[11] Cleanlab. (2025). AI agents in production: 2025 report. https://cleanlab.ai/ai-agents-in-production-2025/

[12] Thompson, A. D. (2023, February 25). Models Table (10,000+ LLM data points). Dr Alan D. Thompson – LifeArchitect.ai. https://lifearchitect.ai/models-table/

[13] McKinsey & Company. (2025, November 5). The state of AI: Agents, innovation, and transformation. McKinsey & Company. https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

Images

Images 2,3,4 were taken from the Boston Consulting Group and Gartner’s State of AI 2025 reports.
